Can you recall the first time you saw and used ChatGPT, Midjourney, Copilot, or DALL-E? I bet it felt like a scene from some sci-fi movie! Words turning into code, text turning into art, and AI prompts building entire websites. Looking back, we now perceive all these AI solutions as something mundane. The advancements in machine learning, large language models (LLMs), and diffusion models have turned generative AI into an integral part of our work and everyday lives. However, there are some other pressing issues that arise. Have you already guessed what I’m talking about? Right, generative AI and security. No matter the solution, security has always been critical. AI development has taken it to another level.

Some essential data from Statista shows how widespread this topic is. Generally speaking, the global generative AI security market is predicted to reach $50.8 billion in 2026 and $133.8 billion by 2030. Impressive, right? The driving forces behind such interest in AI-powered security tools are accelerated automation, predictive analysis, advanced threat detection, cost-efficiency, and other benefits.

I don’t want to disappoint you, but there’s a catch. The more we rely on AI solutions (in generating content, processing data, automating workflows, etc.), the bigger generative AI security risks become. Who is in control? Is the output real, reliable, or safe? What happens if malicious actions take place? How do you handle AI threats?

All the above-mentioned concerns are justifiable. And that’s exactly what I’m going to cover in this article: challenges, principles, strategies, best practices, and future trends in genAI security to make your business safe, innovative, and responsible. And all at the same time!

Understanding Security Challenges in Generative AI

I don’t see a point in beating around the bush, so I’d like to start with the obvious. Generative AI is a complex tool that learns patterns from massive datasets, processes sensitive prompts, and often integrates deeply into company environments and workflows. It is brilliant and groundbreaking. However, there are some serious generative AI security risks that you should be aware of (and address, of course). Let’s take a look.

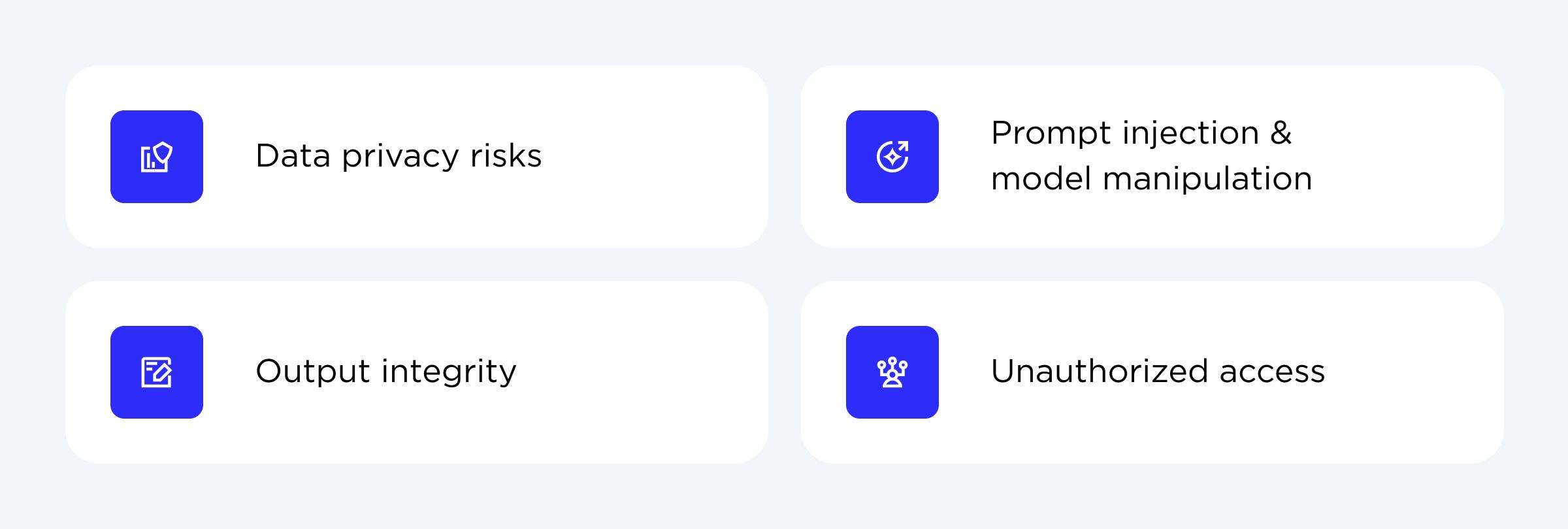

Data privacy risks

As I’ve already mentioned, every AI model learns from data. You might have already understood that this data often includes sensitive or proprietary information. When you start training a model on confidential project documents or personally identifiable information (PII) (names, emails, addresses, personal data, etc.), it is crucial to implement robust anonymization. The data security risk is real: some of this info may resurface later, revealed unintentionally through the model’s outputs.

Keep in mind that even public models can leak private data if they have been trained on unfiltered internet data. The results? Privacy breaches, intellectual property exposure, and compliance issues.
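
To make the anonymization step a bit more tangible, here’s a minimal sketch of scrubbing obvious PII from text before it goes into a training set. The two patterns and the `redact_pii` helper are my own illustrative assumptions, not a complete solution: real pipelines usually combine pattern matching with dedicated PII detection, and names like “Jane” slip right through this one.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs far
# broader coverage, e.g. names, postal addresses, and ID numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> str:
    """Replace every match of a known PII pattern with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or +1 (555) 010-2030."
print(redact_pii(sample))
```

Note that the person’s name stays in the text, which is exactly why regex-only redaction should be treated as a first filter rather than a guarantee.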

Prompt injection and model manipulation

You should treat AI prompt injection as a form of social engineering for AI. How does it work? Basically, attackers create inputs that secretly change the model’s behavior. They can even force the system to reveal data, break the rules, or perform harmful actions.

Another risk is data poisoning, when someone intentionally corrupts the training data so that outputs become biased. The result? The system becomes unreliable. This is a big risk and must be addressed, as it is like taking spoiled ingredients to make a meal and expecting it will taste fine.
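
Here’s a rough sketch of what a first line of defense against prompt injection might look like: screening user input for known attack phrasing and keeping it clearly delimited from the system rules. The phrase list, the `<user_input>` delimiter, and both function names are hypothetical choices of mine. Attackers can rephrase easily, so please read this as one layer among several, not as a fix.

```python
import re

# Hypothetical phrase list for illustration; it is easy to bypass by rewording.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.I),
]


def looks_like_injection(user_input: str) -> bool:
    """Return True if the input matches any known injection phrasing."""
    return any(p.search(user_input) for p in SUSPICIOUS_PATTERNS)


def build_prompt(system_rules: str, user_input: str) -> str:
    """Reject suspicious input; otherwise wrap it in explicit delimiters."""
    if looks_like_injection(user_input):
        raise ValueError("Input rejected: possible prompt injection")
    # Delimiters mark the text as untrusted data rather than instructions.
    return f"{system_rules}\n\n<user_input>\n{user_input}\n</user_input>"
```

In practice, a check like this is usually paired with output filtering and least-privilege access for whatever tools the model can call.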

Output integrity

I find this risk kind of “interesting” because it doesn’t require an attacker. It’s about AI hallucinations, when a model starts generating false information, fabricated citations, and strange content. I think you’ve noticed such cases with ChatGPT. It’s like glitches.

But why is it dangerous? Some AI tools are created for reports, legal documents, or customer communication. In such cases, hallucinations may lead to misinformation, plagiarism, or even copyright violations. The worst consequence is the loss of trust and reputation.

Unauthorized access

It’s no secret that most generative AI solutions are deployed via APIs or cloud providers. Where am I going with this? This approach requires strong authentication. Unfortunately, attackers can take advantage of vulnerabilities, access training data, or misuse the model for malicious purposes. Be careful because such attacks sometimes result in the complete replication of the model.

Cloud security is a part of AI security, as every open endpoint is a potential door, and not every visitor is friendly, if you know what I mean.
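
As a small illustration of guarding such a door, here’s a sketch of checking an API key before a request reaches the model. The key store, the client name, and the demo key are all made up for the example; a real deployment would load digests from a secrets manager and add rate limits and key rotation on top.

```python
import hashlib
import hmac

# Hypothetical key store: only SHA-256 digests of issued keys are kept
# server-side, so a leaked table does not expose the raw keys.
STORED_KEY_DIGESTS = {
    "reporting-service": hashlib.sha256(b"demo-key-123").hexdigest(),
}


def is_authorized(client_id: str, presented_key: str) -> bool:
    """Check a presented API key against the stored digest for that client."""
    stored = STORED_KEY_DIGESTS.get(client_id)
    if stored is None:
        return False
    presented = hashlib.sha256(presented_key.encode("utf-8")).hexdigest()
    # compare_digest runs in constant time, which avoids timing side channels.
    return hmac.compare_digest(stored, presented)
```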

Discovering Key Security Principles for Generative AI Systems

So, I’ve already outlined the key risks one can encounter. What about the key principles of generative AI security? Well, this is what you are about to discover. Let’s imagine them as four pillars that set the foundation for safe AI deployment.

Confidentiality

In theory, this is about protecting data in transit and at rest. How can you do that? I should mention the major methods, such as encrypting training datasets, securing all communication channels, and controlling (or limiting) who can access sensitive data (inputs and outputs). Data exposure can be reduced by privacy-preserving techniques like federated learning or differential privacy.
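
Since differential privacy can sound abstract, here’s a minimal sketch of its classic building block, the Laplace mechanism, applied to a simple count. The function names and the epsilon value are my assumptions for illustration, and this plain floating-point version is not hardened enough for production use.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values that satisfy the predicate."""
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one record changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


ages = [23, 35, 41, 52, 67]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(f"Noisy count of people aged 40+: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy, so the value you pick is a trade-off between protection and accuracy.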

Integrity

There’s no denying that gen AI security solutions must ensure AI model outputs are accurate, trustworthy, and unaltered. Also, remember that all modifications (whether from retraining or external input) must be traceable. How do you follow this principle? If you are like me and want to stay reliable and prevent silent tampering, you’ll build logging, version control, and validation systems into your tools.
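
One way to make tampering visible is a hash-chained change log, where every entry’s hash depends on the one before it. Below is a minimal sketch under my own assumptions (the record fields and function names are illustrative); in a real setup, the log would live in append-only storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the previous entry."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record, chaining it to the last entry in the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"record": record, "hash": entry_hash(prev_hash, record)})


def verify_log(log: list) -> bool:
    """Recompute the chain and report whether every hash still matches."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If anyone edits an old record, every hash after it stops matching, so the change can’t stay silent.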

Availability

Does anyone like downtime? Of course not. Let alone when it comes to AI applications and systems that drive vital operations. That is why it is paramount to create AI services that stay resilient and can withstand DDoS attacks or API overuse. Consistent performance and available services are possible with proper resource management and traffic throttling. Even under pressure, your system remains available.
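
Traffic throttling is often implemented with a token bucket, so here’s a minimal single-process sketch of one. The class name and parameters are my own assumptions; a production setup would typically keep this state in an API gateway or a shared store.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then spend tokens if enough remain."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

The `clock` parameter is there only to make the limiter easy to test; by default it uses the monotonic system clock.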

Accountability

This is the most important part to mention. AI models don’t conduct decision-making by themselves. Why? Because humans own the outcomes. To maintain such a balance, there are several techniques to implement, including audit trails, access logs, and permission levels. This way, it is possible to know and track who accessed what, when, and how. And when something goes wrong, it is easy to tell who’s responsible (developers, providers, or users).
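
To show how permission levels and audit trails fit together, here’s a small sketch in which every authorization decision is recorded. The roles, actions, and the in-memory `audit_trail` list are hypothetical; a real system would write to durable, access-controlled storage.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"generate"},
    "ml_engineer": {"generate", "retrain"},
    "admin": {"generate", "retrain", "export_weights"},
}

audit_trail = []  # in-memory for the sketch only


def authorize(user: str, role: str, action: str) -> bool:
    """Decide whether the role permits the action and log the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_trail.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "who": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```

Denied attempts are logged as well, since they are often the first sign that something is off.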

Defining Techniques for Securing Generative AI Models

Theory is great, but I like to believe that security lives in practice. Good news! There are generative AI security best practices to follow if your company wants to protect its AI pipelines. Let’s explore the key ones I’ve decided to highlight.

| Data encryption and anonymization | Secure training models | Access controls and authentication | Adversarial testing | Watermarking and content tracing |
| --- | --- | --- | --- | --- |
| Encrypt training files, anonymize all personal data and identifiers, and use tokenization. This technique also ensures regulatory compliance. | Involve differential privacy (controlled noise to data) and federated learning (models learn without centralizing data). | Protect the model endpoint with strong authentication. Involve API keys, role-based access controls, multi-factor authentication, and zero-trust policies. | Conduct crash tests for AI models. Simulate various attacks (data corruption, prompt injections, bias exploitation, etc.) and define weak points. | Use invisible digital watermarks and/or cryptographic signatures. This way, you can see whether the content was generated by AI and by which system. |
| Note: even if some data is leaked, it can’t be traced back to users. | Note: They prevent data reconstruction or leakage. | Note: It eliminates accidental misuse and intentional tampering. | Note: Red team or ethical hackers often help with such testing. | Note: This technique helps prevent fraud and misinformation. |
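
For the content tracing column, here’s a minimal sketch of tagging generated output so its origin can be checked later. I’m using a keyed hash (HMAC) as a stand-in for a cryptographic signature; the function names and the `model_id` field are assumptions, and public verification by third parties would require an asymmetric signature scheme instead.

```python
import hashlib
import hmac


def sign_output(content: str, model_id: str, key: bytes) -> str:
    """Return an HMAC-SHA256 tag that binds the content to a model ID."""
    message = f"{model_id}\n{content}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_output(content: str, model_id: str, tag: str, key: bytes) -> bool:
    """Check that the tag matches this exact content and model ID."""
    expected = sign_output(content, model_id, key)
    return hmac.compare_digest(expected, tag)
```

Anyone holding the key can confirm both that the text is unchanged and which system produced it.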

Regulatory and Ethical Considerations

I’ve already mentioned it, but it’s worth repeating: regulatory compliance for gen AI security is non-negotiable. Luckily for us, living in the 21st century, the legal and ethical landscape for this security is expanding and improving really fast. In other words, there are established frameworks that regulate generative AI and the way it works. Let’s explore.

Gen AI Security Regulations

EU AI Act

This is Europe’s first comprehensive regulation. It aims at defining risk levels and compliance requirements for AI systems.

NIST AI Risk Management Framework

This is a set of U.S. guidelines for managing AI risks, including those of generative AI. Its main focus is to promote trustworthy and secure AI development.

ISO/IEC 42001

Speaking of this one, I should say it’s a must to follow. It is a new international standard for AI management and governance.

You might be wondering, “But why does compliance matter so much?” I’ll try to put it briefly.

When you establish generative AI systems, they get access to and process huge amounts of data. This data is usually sensitive and requires robust protection. And it’s not just a checkbox. It’s about securing data from any misuse or loss of trust. By complying with the above-mentioned regulations, you prevent penalties, reputational damage, and biased outcomes. Moreover, your end users are sure your company values transparency, data security and privacy, and accountability.

Speaking of transparency, the ethical rules are hard and fast. What is essential? There are a few things to consider: disclose when AI-generated content is used, avoid (or eliminate) biased datasets, and explain all decision-making processes. What are the results? Users trust you more as they understand their privacy rights and know that you care.

There are several strategies to follow that I’d like to share with you. Bear with me.

- Start building compliance into AI tools from the very beginning (not after deployment).

- Conduct regular AI model audits to detect any risk or bias.

- Implement frameworks like ISO/IEC 42001, EU AI Act, or NIST AI RMF for structure and generative AI security best practices.

- Be transparent and disclose whenever AI-generated content is used and how AI models process data.

- Create ethics boards that can take care of AI governance.

- Conduct regular training for your employees regarding legal updates and responsible AI use.

- Make AI-driven results explainable to your end users.

As you can see, AI security is about respecting data and people, combining legal and ethical considerations to keep the balance.

Ways to Build a Secure Generative AI Architecture

Gen AI security solutions don’t appear by accident. The process of designing these tools is about a comprehensive approach that covers the whole AI lifecycle. This approach is called security-by-design, and it implies embedding protection mechanisms into every layer of the AI (I mean from data ingestion to model training and deployment).

Let’s explore how that architecture typically looks.

| Security data layer | Model layer | Application layer | Monitoring layer |
| --- | --- | --- | --- |
| Encrypted storage, anonymization, and strict access policies. | Training in isolated environments with privacy-preserving techniques. | Role-based access control for all users and APIs. | Continuous audit logs, anomaly detection, and performance tracking. |

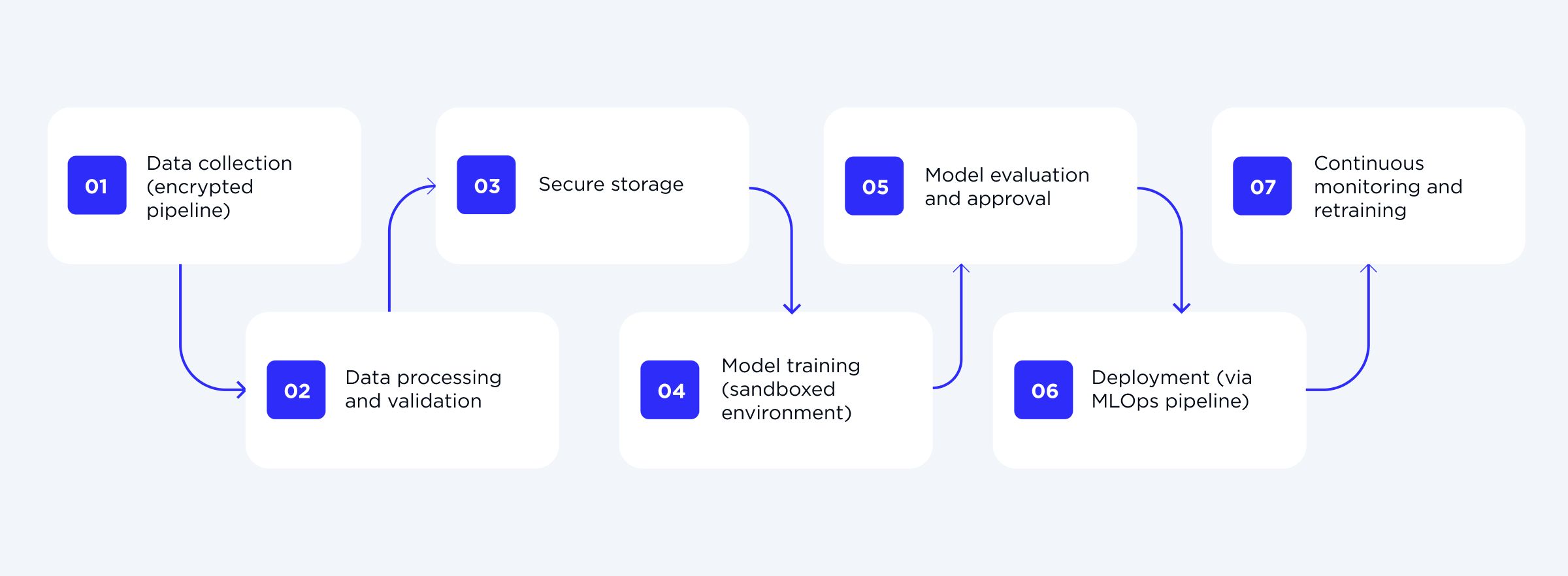

This approach of security at every layer ensures robust and reliable AI systems. However, cloud security plays a vital role as well. Consequently, cloud providers and MLOps pipelines are part and parcel of the entire workflow. What do they do? Establish an encrypted pipeline, automate compliance checks, manage model versions, and maintain safe environments for both retraining and deployment. How does the genAI workflow look with cloud security and MLOps reinforcement?

So, the result? Such a robust workflow not only generates valuable outputs but also respects privacy, integrity, and accountability. Building a solution is not so easy, but with the right team of specialists, you can get an efficient tool built with generative AI security firmly in mind.

AI Security: Future Trends To Expect

Looking back, I would say 2024 was the year of experimentation. How would I define 2025 and beyond? Well, these are the years of fortification. Since generative AI tools have become increasingly widespread, generative AI in cybersecurity can now be viewed as a “partner in crime”, not a liability.

The future definitely holds some innovations for us to embrace. What to expect?

- There are emerging techniques such as homomorphic encryption and secure multi-party computation. They allow models to learn from sensitive data without even “reading” it. This ability to compute on data that stays encrypted is a real breakthrough.

- Another innovation is zero-trust AI frameworks. This trend can completely change the way we perceive identity and access. The thing is, it assumes any actor (system or human) shouldn’t be trusted and verifies them continuously.

- AI-driven defense is going even further, with systems increasingly able to defend themselves against threats. GenAI is and will be used in cybersecurity more and more to detect threats, predict vulnerabilities, and automate responses to breaches.

- I’ve noticed a growing interest in model watermarking and federated learning. The first one is used to trace AI-generated outputs. The latter is for training models securely across distributed data sources.

- Last but not least is AI-driven red teaming. This is a gen AI security technique in which AI agents run simulated attacks. This approach really helps identify weak spots before real attackers do.
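
To give a feel for the secure multi-party computation idea from the list above, here’s a toy sketch of additive secret sharing: several parties compute a joint sum while each individual value stays hidden. The modulus, the function names, and the assumption that everyone follows the protocol honestly are mine; real protocols add protection against cheating and dropped participants.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this large prime


def make_shares(value: int, n_parties: int) -> list:
    """Split a value into n random-looking shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: list) -> int:
    """Compute the sum of all inputs using only shares and subtotals."""
    n = len(private_values)
    # Row i holds the shares that party i hands out, one per party.
    all_shares = [make_shares(v, n) for v in private_values]
    # Party j adds up the shares it received and publishes only that subtotal.
    subtotals = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    return sum(subtotals) % MODULUS
```

Each single share looks like random noise, yet the published subtotals still add up to the true total (as long as inputs are non-negative and the total stays below the modulus).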

Exciting, right? I don’t have all the information (no one does). But future AI security will likely be self-monitoring, self-healing, and adaptive. Moreover, it is possible that AI will detect when it’s being manipulated, update its own behavior, and build autonomous AI firewalls.

I’m really intrigued and can’t wait to see what the future has to offer. The one thing that is definite is that future AI security will be about resilience and intelligent defense.

Wrapping Up

As we witness the cutting-edge development of machine learning, large language models, and diffusion models, there’s no denying that generative solutions have been shaping the way we work, innovate, and create. The major currency here is trust. So generative AI security is number one. I can say that without it, even the smartest systems risk turning against their own purpose.

There are challenges to consider, principles to follow, best practices to implement, regulations to comply with, and trends to expect. View it as a continuous process: data protection, output verification, human oversight, and more. We’ve already moved beyond building smarter systems. This is the era of building safer ones!

I can say for sure that for our OTAKOYI team, a security-by-design approach is the only way forward amid generative AI security risks. Are you ready to overcome these challenges and set off toward sustainable and secure growth?