The development of AI is rapid and exhilarating. No matter their expertise, no one can tell you for sure what the future of artificial intelligence holds. However, experienced AI practitioners will tell you to make the most of custom AI solutions now so that you are prepared for these fast-evolving changes. Competition among enterprises is fierce and requires everyone to stay up to date and adopt the latest technologies to stay ahead. Recently, more and more organizations have been implementing Knowledge Graphs (KGs) and Large Language Models (LLMs) to boost their data-driven decision-making, improve content creation and management, and optimize customer service. The question is, “Is it enough just to implement these technologies at the enterprise level?” Our answer is, “Of course not.” It requires a clear understanding of both tools and how they can work together to deliver meaningful insights. Let’s find out how these tools can be game-changers for your enterprise.

What Are Large Language Models, Their Types And Features?

Before we explore LLMs and their types, let’s imagine a real-case scenario. You run a large retail company and manage its internal knowledge platform. Your goal is to give employees easier access to product details, inventory status, pricing changes, and customer reviews. How do LLMs work in this scenario? You can use an LLM to help with common queries like, "Does this product come in other colors?" or "What’s the refund policy for this item?" What is more, LLMs are trained on large datasets of conversational text and can draft responses that sound natural and customer-friendly. The challenge is still there: if left unchecked, an LLM might start giving "creative" answers and misleading customers. The thing is, LLMs are not designed to provide accurate facts unless they are tied to specific, verified data sources. Hopefully, you’ve got the main idea behind it. Now, let’s go into specifics (and buckle up, we will keep coming back to this scenario).

So, what are Large Language Models (LLMs)? These tools are changing the way we interact with technology. They understand and generate human language. You can imagine this technology as an assistant that is designed to tackle complex language tasks, including answering questions, creating content, and holding conversations.

But how do these models work? What makes each one unique? Here is where we break down the main types of LLMs and their strengths and weaknesses.

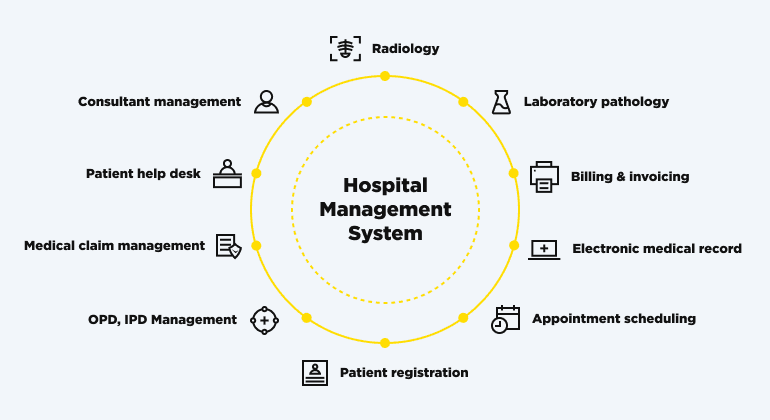

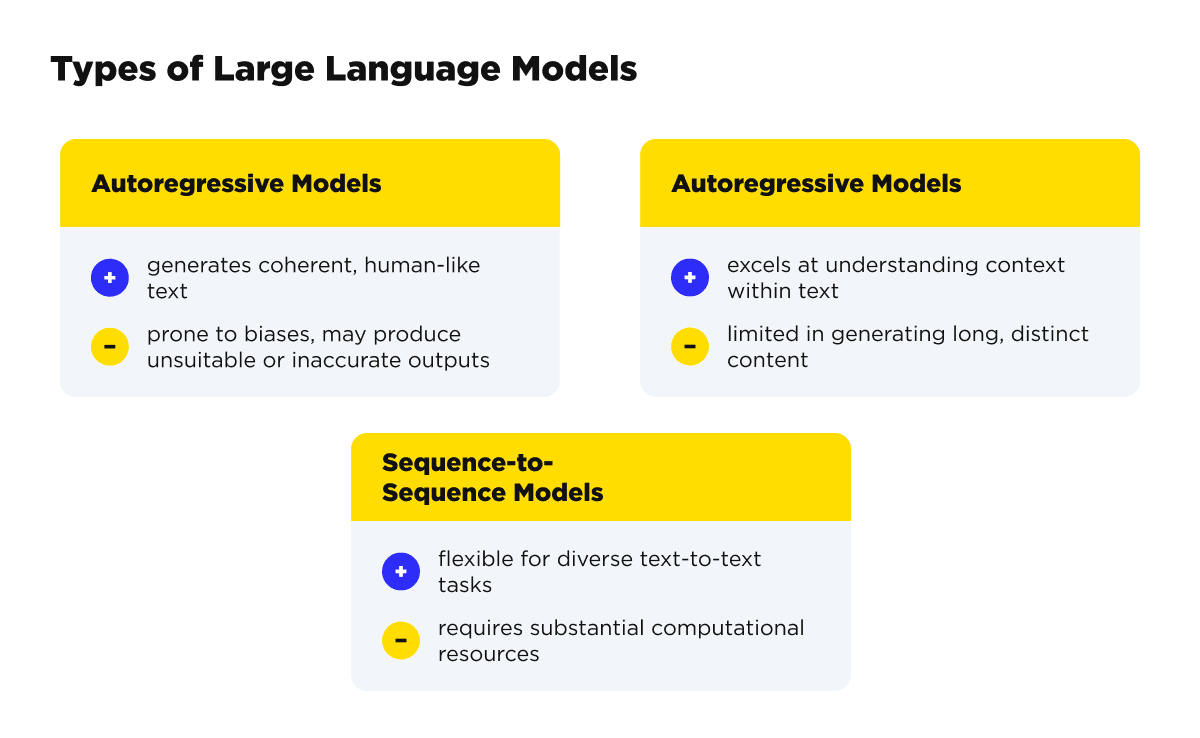

Discovering types of Large Language Models

#1 Autoregressive models (such as GPT (Generative Pre-trained Transformer) models)

This type of LLM can create text by predicting the next token in a sequence based on the preceding tokens. This type includes smart language engines that are designed and trained on tons of text. They generate human-like responses and carry out a wide range of language tasks.
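To make next-token prediction concrete, here is a minimal sketch that uses a toy bigram counter in place of a real Transformer. Each token is predicted only from the one before it, whereas GPT-style models look at the whole preceding sequence. The corpus and the function names `train_bigram` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict:
    """Count which token follows which in a whitespace-tokenized corpus."""
    tokens = corpus.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(follows: dict, start: str, max_tokens: int = 5) -> list:
    """Greedy autoregressive loop: append the most frequent follower of the last token."""
    out = [start]
    for _ in range(max_tokens):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # nothing ever followed this token in the corpus
        out.append(candidates.most_common(1)[0][0])
    return out

# Hypothetical training text, loosely based on the retail scenario.
corpus = "the jacket is blue . the jacket is in stock . the refund policy is simple ."
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_tokens=3)))
```

The loop is the important part: every generated token becomes input for the next prediction, which is why an early mistake can carry through the rest of the output.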

Strengths:

GPT models (the most popular example is the one developed by OpenAI) stand out by generating coherent and human-like text. They are suitable and helpful for different applications in chatbots, automated generation of content (if you do not require human-written text), and summarizing long documents (students do like this feature).

Weaknesses:

They are mostly trained on vast datasets, which may contain biases and misleading information. With no strict guardrails, GPT models may create outputs that aren’t enterprise-friendly and can even harm the business.

#2 Autoencoding models (such as BERT (Bidirectional Encoder Representations from Transformers) models)

These models learn the context of words in a sentence by predicting masked tokens: some tokens are hidden, and the model predicts them by analyzing the surrounding context on both sides. In simple English, this model “reads” the text and fills in the missing words. It processes all the tokens of an input in parallel rather than one at a time.
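As a rough illustration of masked-token prediction, the sketch below fills a `[MASK]` by looking at the word on each side of it. It uses simple counts rather than a Transformer encoder, so it only shows the idea of using context from both directions. The sentences and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_context_counts(sentences):
    """Map each (left word, right word) pair to counts of the word seen between them."""
    table = defaultdict(Counter)
    for sentence in sentences:
        toks = sentence.split()
        for i in range(1, len(toks) - 1):
            table[(toks[i - 1], toks[i + 1])][toks[i]] += 1
    return table

def fill_mask(table, sentence, mask="[MASK]"):
    """Predict the masked word from its left and right neighbours, or return None."""
    toks = sentence.split()
    i = toks.index(mask)
    if i == 0 or i == len(toks) - 1:
        return None  # this toy needs a neighbour on both sides
    candidates = table.get((toks[i - 1], toks[i + 1]))
    return candidates.most_common(1)[0][0] if candidates else None

# Hypothetical training sentences.
SENTENCES = [
    "the jacket is blue",
    "the jacket is red",
    "the shirt is blue",
    "the refund policy is simple",
]
table = train_context_counts(SENTENCES)
print(fill_mask(table, "the [MASK] is blue"))
```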

Strengths:

BERT models are particularly good at understanding context because they look at language bidirectionally. You can use them for question-answering systems, intent recognition, and document classification. Google has even used BERT in its search engine to better understand the context of words in search queries.

Weaknesses:

BERT’s main limitation is its difficulty in generating long, coherent text. In other words, it is not the type of LLM to choose for language generation.

#3 Sequence-to-sequence (Seq2Seq) models (like T5 (Text-To-Text Transfer Transformer) models)

These models are aimed at tasks where both the input and the output are sequences, such as language translation, summarization, and text generation. They consist of an encoder and a decoder: the encoder processes the input, and the decoder generates the output.

Strengths:

The best thing about T5 models is that they translate every NLP problem into a text-to-text format. It offers a lot of flexibility for a wide range of tasks.

Weaknesses:

The main downside is the significant computational resources Seq2Seq models require. They may not be the best fit for organizations with limited infrastructure.

How Do Knowledge Graphs Fit In And What Are Their Types?

Let’s go back to our real-case scenario about the retail company and its internal platform. Here’s where a Knowledge Graph comes into play and adds value. The KG could represent each product as an entity and connect it to accurate details about color options, stock status, and store locations. Managers or customer service representatives could then pull directly from the KG for fact-based responses. What is great about that? The KG provides reliable, structured, and up-to-date information.

In simple language, Knowledge Graphs represent entities and the relationships between them (often visualized as nodes and edges). They are typically stored in a graph database, which allows for easy and relevant information retrieval and offers a more intuitive approach to data management in general.
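One common way to picture nodes and edges is as subject-predicate-object triples. The sketch below stores a few triples for the retail scenario and filters them with a small helper. The product, store, and predicate names are invented for illustration, and a production system would more likely use a graph database than a Python list.

```python
from typing import NamedTuple, Optional

class Triple(NamedTuple):
    subject: str    # the node the edge starts from
    predicate: str  # the edge label (the relationship)
    obj: str        # the node or value the edge points to

# Hypothetical facts about one product.
TRIPLES = [
    Triple("blue_jacket", "is_a", "product"),
    Triple("blue_jacket", "has_color", "blue"),
    Triple("blue_jacket", "available_in_size", "M"),
    Triple("blue_jacket", "stocked_at", "store_12"),
    Triple("store_12", "located_in", "Austin"),
]

def query(triples, subject: Optional[str] = None,
          predicate: Optional[str] = None, obj: Optional[str] = None):
    """Return the triples that match every field that is not None."""
    return [
        t for t in triples
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]

# Where is the blue jacket stocked, and where is that store?
for hit in query(TRIPLES, subject="blue_jacket", predicate="stocked_at"):
    print(hit.obj, query(TRIPLES, subject=hit.obj, predicate="located_in")[0].obj)
```

Following an edge from the product to the store and then from the store to its city is the kind of multi-step lookup that a graph layout makes straightforward.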

What are the main types of KGs for enterprises to consider? Well, we are just getting to that. Let’s find out!

What are the types of Knowledge Graphs?

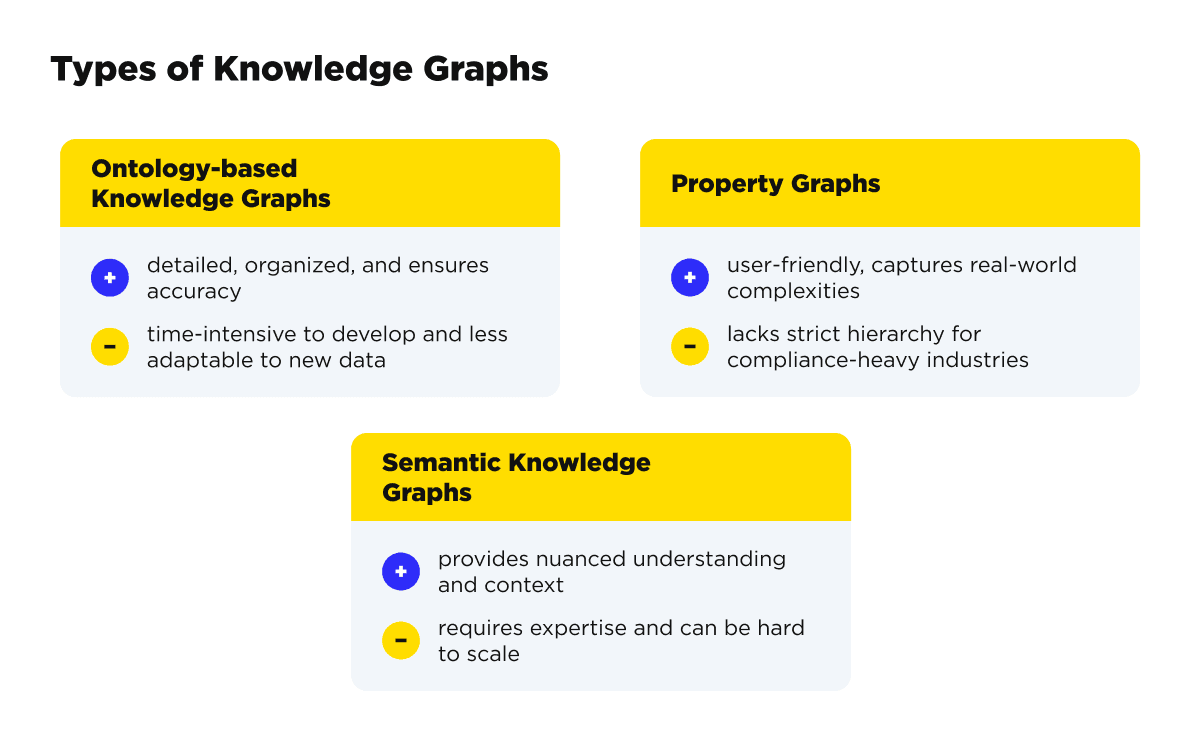

#1 Ontology-based Knowledge Graphs

This type encompasses highly structured and rule-driven networks that organize data by defining relationships and categories. It provides a detailed blueprint for how concepts may be linked. When your company works in a complex field, this is your go-to option to ensure precise connections and well-suited information.
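The "rule-driven" part can be sketched as a schema that states which entity types each relationship may connect, plus a check that rejects facts breaking those rules. This is a simplified illustration, not a formal ontology language such as OWL. The `ONTOLOGY` and `ENTITY_TYPES` tables and the `validate` function are hypothetical.

```python
# Each predicate maps to the (subject type, object type) it is allowed to connect.
ONTOLOGY = {
    "treats": ("Drug", "Condition"),
    "interacts_with": ("Drug", "Drug"),
}

# Illustrative entity catalogue.
ENTITY_TYPES = {
    "aspirin": "Drug",
    "warfarin": "Drug",
    "headache": "Condition",
}

def validate(subject: str, predicate: str, obj: str) -> list:
    """Return a list of rule violations; an empty list means the triple conforms."""
    if predicate not in ONTOLOGY:
        return [f"unknown predicate: {predicate}"]
    expected_subject, expected_object = ONTOLOGY[predicate]
    errors = []
    if ENTITY_TYPES.get(subject) != expected_subject:
        errors.append(f"{subject} is not a {expected_subject}")
    if ENTITY_TYPES.get(obj) != expected_object:
        errors.append(f"{obj} is not a {expected_object}")
    return errors

print(validate("aspirin", "treats", "headache"))
print(validate("headache", "treats", "aspirin"))
```

Checks like this are where the upfront effort goes: every new kind of relationship has to be added to the schema before data that uses it is accepted.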

Strengths:

These are highly organized graphs built on formal structures and ontologies. Their main benefit for enterprises is strict data management. Organizations in healthcare or finance may find this type especially useful.

Weaknesses:

Ontology-based graphs require intensive upfront development and are less flexible when adjusting to new data types. You should be ready for an extended delivery time.

#2 Property graphs

Property graphs are flexible graph structures where nodes and relationships can hold various attributes. They are well suited to capturing complex, real-world data in detail. You can define custom labels and properties, which leads to easy and intuitive navigation of interconnected data.
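A minimal in-memory sketch of the property graph idea follows: both nodes and relationships carry a label (or type) and a free-form dictionary of properties. The class names and sample data are assumptions for illustration and are not tied to any particular graph database.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    id: str
    label: str                                  # e.g. "Customer" or "Product"
    props: dict = field(default_factory=dict)   # arbitrary attributes

@dataclass
class Edge:
    source: str
    target: str
    type: str                                   # e.g. "PURCHASED"
    props: dict = field(default_factory=dict)   # attributes on the relationship

class PropertyGraph:
    def __init__(self):
        self.nodes = {}
        self.edges = []

    def add_node(self, node: Node) -> None:
        self.nodes[node.id] = node

    def add_edge(self, edge: Edge) -> None:
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges.append(edge)

    def neighbors(self, node_id: str, edge_type=None):
        """Return (target node, edge) pairs for outgoing edges, optionally filtered by type."""
        return [
            (self.nodes[e.target], e)
            for e in self.edges
            if e.source == node_id and (edge_type is None or e.type == edge_type)
        ]

# Hypothetical data for a recommendation-style use case.
graph = PropertyGraph()
graph.add_node(Node("c1", "Customer", {"name": "Dana", "tier": "gold"}))
graph.add_node(Node("p1", "Product", {"name": "Blue jacket", "price": 79.99}))
graph.add_edge(Edge("c1", "p1", "PURCHASED", {"quantity": 2}))

for product, edge in graph.neighbors("c1", "PURCHASED"):
    print(product.props["name"], edge.props["quantity"])
```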

Strengths:

Property graphs are more flexible and user-friendly, making them ideal for social networks or recommendation systems that rely on varied and interconnected data.

Weaknesses:

They may lack the strict hierarchical structure needed in compliance-heavy industries, making them challenging to manage.

#3 Semantic Knowledge Graphs

Semantic knowledge graphs are designed to capture and represent the meaning behind data. They connect entities through logical relationships, providing structure, context, and better interpretation of complex data. This allows for discovering insights that rely on nuanced understanding and connections between different pieces of information.

Strengths:

Semantic graphs add meaning and context, making them useful for enhancing LLM outputs by providing context-specific insights.

Weaknesses:

They require specialized development expertise and can be challenging to scale across different languages and data types.

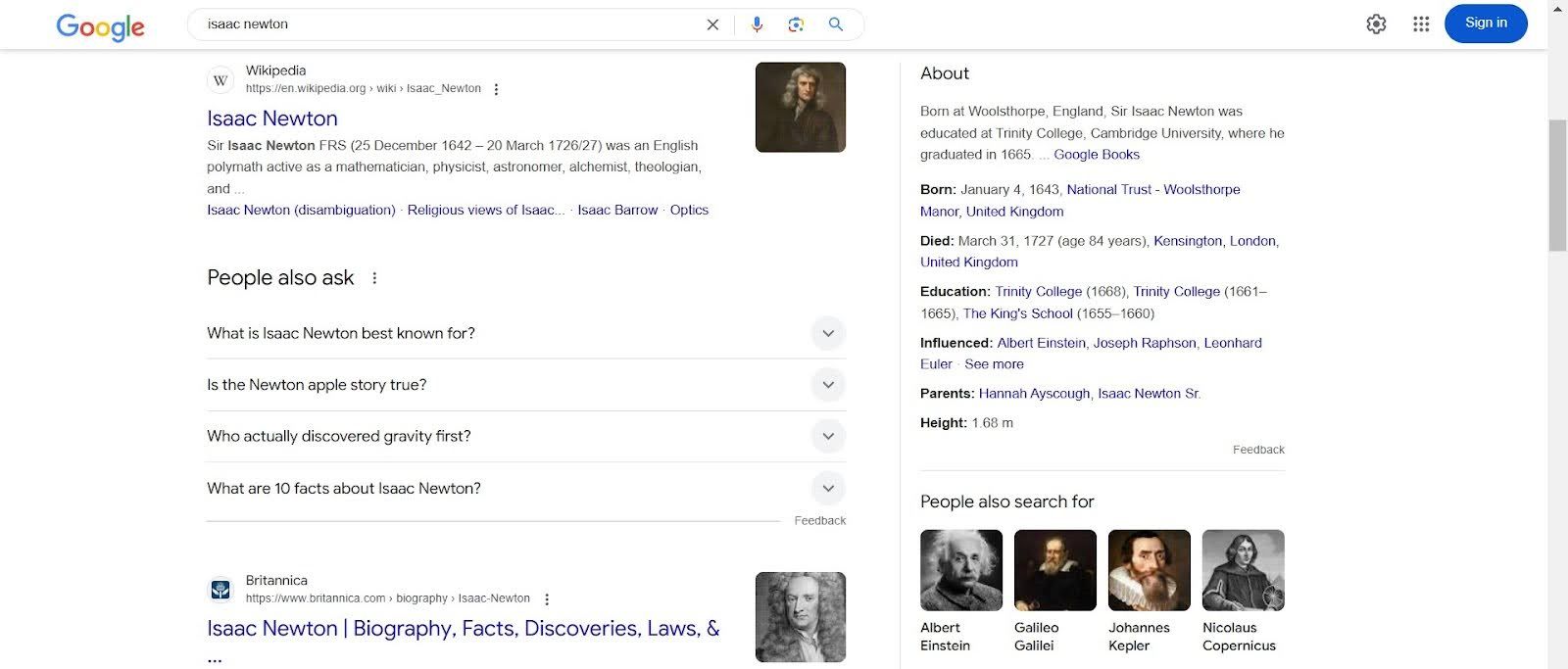

Example of knowledge graph-based knowledge panel used by Google:

Source: Google

As this common example shows, a knowledge graph-based knowledge panel by Google displays a brief, fact-driven summary of key information about a person, place, or other topic by connecting relevant entities and showing them in an organized, easy-to-read format.

But what happens when you use Large Language Models (LLMs) and Knowledge Graphs (KGs) together? This is the best part you are about to discover.

Reasons To Integrate a KG with Your Enterprise LLM

In the previous sections, we walked through the real-case scenario and how LLMs and KGs may serve a large retail company. We also covered their types, strengths, and weaknesses. But what if these technologies work together? Integrating KGs and LLMs is a strong solution because each covers the other’s weaknesses. Let’s go back to our real-case scenario.

Let’s say a customer service rep enters a question about a specific product, such as "Is there a restock date for the blue jacket in the medium size?" The LLM interprets the question and drafts a response, while the KG verifies that the answer reflects the true status of the product. Instead of relying on the LLM to produce facts in a purely linguistic way, the KG confirms the factual details. This ensures employees get accurate, up-to-date information.

This combination leads to a win-win result. While the LLM keeps responses conversational, the KG supplies reliable facts. Many people rely on a digital assistant like ChatGPT to draft text, and those drafts still need fact-checking for accuracy. In other words, an LLM can handle language, but it needs a KG to keep the answers grounded in truth.

As you can see, integrating LLMs with large-scale KGs can open new doors for your “business intelligence.” Let’s find out some key benefits both tools together may bring.

Improved reasoning and understanding

Knowledge graphs can serve as a factual foundation for large language models (LLMs), enriching them with verified data and leading to improved reasoning capabilities and contextual understanding. This integration helps LLMs respond to questions with higher accuracy and logical consistency, relying on structured and trustworthy information. For example, if a customer asks, "Can I open a joint account if I live in a different state?" the LLM can provide an accurate answer based on the verified data within the knowledge graph.

Consistent and validated knowledge

Enterprises can rely on knowledge graphs to verify and cross-check information generated by LLMs, which helps protect them from inaccuracies. This validation layer not only improves reliability but also supports compliance with industry standards and regulations. For instance, let’s take a pharmaceutical company. If the LLM mentions a drug's effectiveness, the knowledge graph validates whether that claim aligns with the latest clinical trial results.

Contextual and semantic accuracy

By combining semantic layers into a structured context, knowledge graphs allow LLMs to answer nuanced or complex questions with greater depth. This enrichment allows for responses that are semantically aligned, precise, and tailored to the complexity of real-world inquiries. For example, a customer in an e-commerce store asks an LLM, “What is the return policy on products?” When the LLM doesn’t have the whole context, it might give a generic answer about returns. However, integrating the LLM with a KG leads to a more detailed and contextually accurate answer.

Would you like to enjoy the benefits of the LLM and KG combo?

Contact us

How Do Knowledge Graphs Boost LLMs in Enterprises?

As we have already mentioned, when LLMs can access a knowledge graph, they can produce answers that are more accurate, contextualized, and reliable. For instance, in healthcare, an LLM connected to a medical KG could provide more precise answers to questions about treatments and conditions by pulling information only from verified, industry-specific sources.

In the finance sector, for example, KGs help LLMs avoid risky recommendations by grounding responses in factual financial knowledge and compliance requirements. Integrating KGs with LLMs allows businesses to ensure LLM outputs meet industry-specific accuracy standards.

What Impact Do LLMs Have On Knowledge Graphs in Enterprises?

However, don’t assume that the benefits flow only one way. LLMs, in turn, offer advantages to Knowledge Graphs at the enterprise level. In simple language, they simplify user interactions with the data. By understanding user input in natural language, LLMs make it easier for non-technical users to interact with KGs and get relevant insights without needing any specialized query language skills.

For example, in customer service, LLMs can simplify the process of querying a KG, allowing representatives to access detailed information about products, services, or customer histories through simple language commands. This combination makes data management intuitive, giving KGs a user-friendly interface through natural language processing.
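The sketch below shows the shape of that flow: a plain-language question is turned into a structured lookup against the KG. A pair of regular expressions stands in for the LLM here, which is a strong simplification, since a real model would handle far more varied phrasing. The `KG` contents, the patterns, and the function names are all hypothetical.

```python
import re

# Hypothetical KG, keyed by (entity, attribute).
KG = {
    ("blue jacket", "price"): "79.99",
    ("blue jacket", "colors"): "blue, black",
    ("red scarf", "price"): "19.99",
}

# Stand-in for the LLM: patterns that map a question to an attribute.
PATTERNS = [
    (re.compile(r"how much (?:is|does) the (?P<item>.+?)(?: cost)?$"), "price"),
    (re.compile(r"what colors does the (?P<item>.+) come in$"), "colors"),
]

def parse(question: str):
    """Turn a question into an (entity, attribute) lookup key, or None."""
    text = question.lower().strip().rstrip("?")
    for pattern, attribute in PATTERNS:
        match = pattern.match(text)
        if match:
            return (match.group("item"), attribute)
    return None

def answer(question: str, kg: dict):
    """Look the parsed key up in the KG; None means 'not understood' or 'not known'."""
    key = parse(question)
    return kg.get(key) if key else None

print(answer("How much is the blue jacket?", KG))
print(answer("How much does the red scarf cost?", KG))
```

The representative never writes a graph query; the translation step does it for them, and the answer still comes from the KG rather than from the model's memory.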

Discovering KGs and LLMs Integration Approaches

Depending on your enterprise's needs, there are several approaches to integrating KGs and LLMs effectively. Let’s take a closer look at them.

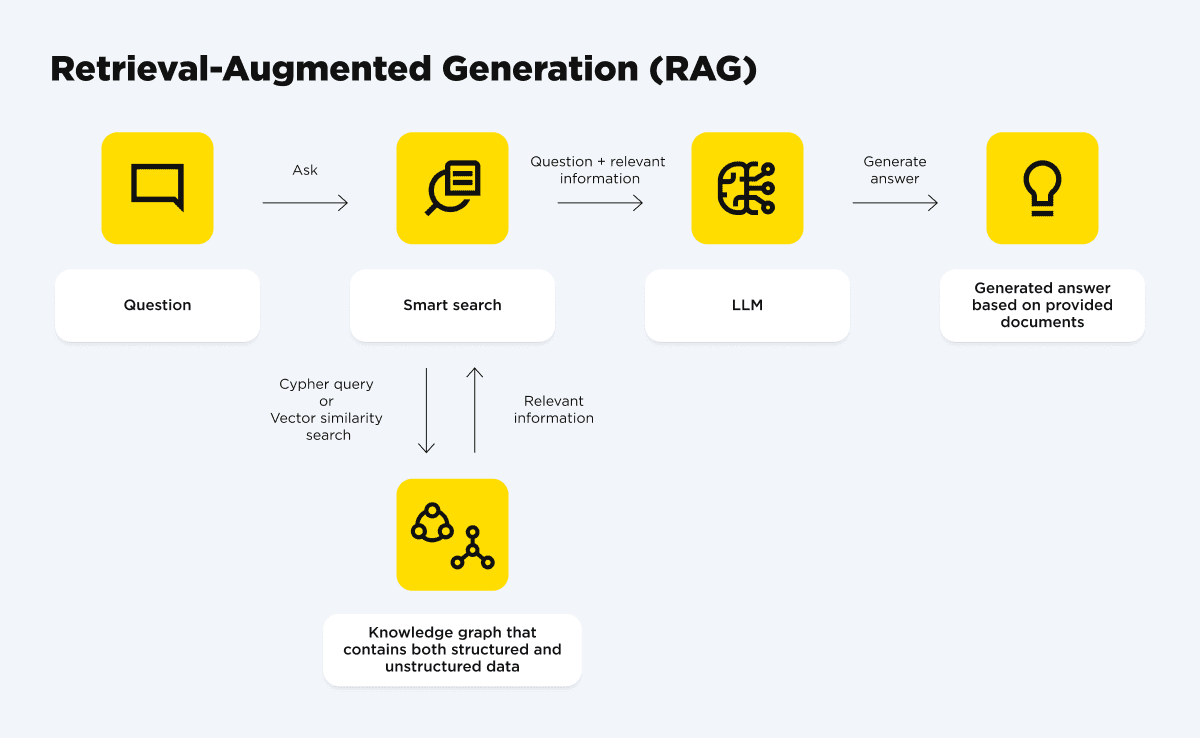

Retrieval-Augmented Generation (RAG)

RAG is a powerful approach that combines the capabilities of LLMs with the structured knowledge of KGs. In this method, the application first retrieves relevant, fact-checked information from the knowledge graph (or other data sources) and passes it to the LLM, which then generates the final answer. This helps ground the LLM’s output in real-world facts, increasing the accuracy and relevance of the information provided.
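A minimal sketch of the retrieve-then-generate flow follows, under some loud assumptions: retrieval is a naive substring match on entity names, the KG is a short list of made-up facts, and `stub_llm` is a placeholder where a real model call would go. Real systems usually retrieve with graph queries or embeddings.

```python
from typing import Callable

# Hypothetical facts as (subject, attribute, value).
KG = [
    ("blue jacket", "size M in stock", "no"),
    ("blue jacket", "size M restock date", "2025-03-14"),
    ("red scarf", "in stock", "yes"),
]

def retrieve(question: str, kg: list) -> list:
    """Naive retrieval: keep facts whose subject is mentioned in the question."""
    q = question.lower()
    return [fact for fact in kg if fact[0] in q]

def build_prompt(question: str, facts: list) -> str:
    """Put the retrieved facts in front of the question so the model can use them."""
    fact_lines = "\n".join(f"- {s}: {p} = {o}" for s, p, o in facts)
    return (
        "Answer using only the facts below. If they are not enough, say so.\n"
        f"Facts:\n{fact_lines}\n\nQuestion: {question}"
    )

def answer(question: str, kg: list, llm: Callable[[str], str]) -> str:
    facts = retrieve(question, kg)
    if not facts:
        return "I could not find verified information about that."
    return llm(build_prompt(question, facts))

def stub_llm(prompt: str) -> str:
    # Placeholder for a real model call; it only reports how many facts it was given.
    n_facts = sum(line.startswith("- ") for line in prompt.splitlines())
    return f"[model reply based on {n_facts} retrieved facts]"

question = "Is there a restock date for the blue jacket in the medium size?"
print(build_prompt(question, retrieve(question, KG)))
print(answer(question, KG, stub_llm))
```

Passing the model in as a function keeps the retrieval and prompt-building steps testable on their own, whichever LLM is used behind it.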

Knowledge-augmented language models

This approach involves incorporating knowledge graph data directly into LLMs. By embedding structured data from KGs, enterprises can empower LLMs with an in-depth language understanding of industry-specific information. This is a powerful method to use but can require plenty of resources.

Post-processing and validation

In this approach, LLM outputs are verified against a KG for accuracy before they’re sent to end-users. It is less expensive and resource-demanding than embedding knowledge into the model. It can be a great option for businesses that prioritize information accuracy and compliance but want a more budget-friendly approach.
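The validation step can be sketched as a comparison between the claims in an LLM draft and the facts in the KG. The sketch assumes the claims have already been extracted from the draft as (subject, attribute, value) tuples, which is a hard problem in its own right and is not shown. The facts and function names are hypothetical.

```python
# Hypothetical verified facts, keyed by (subject, attribute).
KG_FACTS = {
    ("blue jacket", "in_stock"): "no",
    ("blue jacket", "restock_date"): "2025-03-14",
}

def check_claims(claims: list, facts: dict) -> list:
    """Label each claim as supported, contradicted, or unverified against the KG."""
    report = []
    for subject, attribute, value in claims:
        known = facts.get((subject, attribute))
        if known is None:
            status = "unverified"    # the KG has nothing to say about it
        elif known == value:
            status = "supported"
        else:
            status = "contradicted"
        report.append((subject, attribute, value, status))
    return report

def safe_to_send(report: list) -> bool:
    """Release the draft only if every claim is backed by the KG."""
    return all(status == "supported" for *_, status in report)

# Claims assumed to have been extracted from an LLM draft.
draft_claims = [
    ("blue jacket", "in_stock", "yes"),
    ("blue jacket", "restock_date", "2025-03-14"),
]
report = check_claims(draft_claims, KG_FACTS)
print(report)
print(safe_to_send(report))
```

Treating "unverified" as a blocker is a conservative choice that suits compliance-heavy settings; a less strict policy could let such claims through with a warning.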

Hybrid models

Hybrid models combine the two previous approaches by embedding knowledge graph data into LLMs while also validating outputs through post-processing. Hybrid models offer a balanced approach, allowing enterprises to benefit from accurate and contextually rich insights.

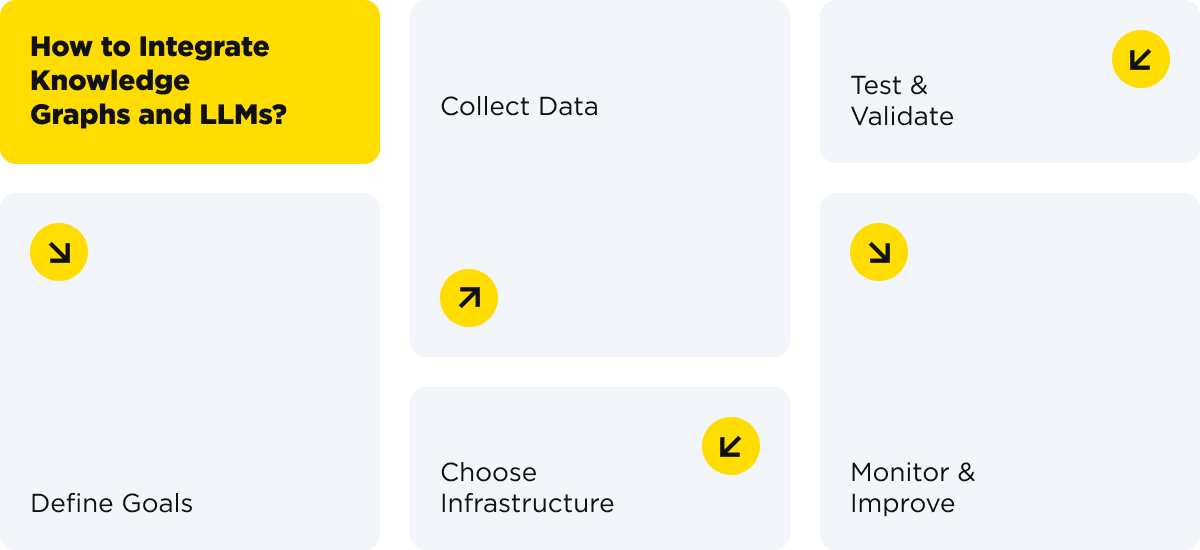

What Is The Process of Implementing Knowledge Graphs and LLMs Together at the Enterprise Level?

When you implement KGs and LLMs together, we recommend partnering with a dedicated team like OTAKOYI to help you get started. We are not saying you can’t define your objectives yourself. We are saying that your business will benefit more when the implementation is handled by professionals.

The process of integrating KGs and LLMs includes:

#1 Defining data needs and business goals

Determine what types of knowledge your KG should contain and how the LLM will use this data. Identify key business areas where the combined solution will provide the most value.

#2 Collecting data and cleaning

Both KGs and LLMs rely heavily on accurate data. Ensure data quality by setting up processes for data collection, cleaning, and validation.
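As a small illustration of the cleaning step, the sketch below normalizes raw triples, drops incomplete rows, and removes duplicates before they go into a KG. The normalization rules (lowercasing everything, underscores in predicates) are assumptions chosen for the example; lowercasing values may be too aggressive for real data such as product codes.

```python
def clean_triples(raw: list) -> list:
    """Normalize, filter, and de-duplicate (subject, predicate, object) rows."""
    seen = set()
    cleaned = []
    for row in raw:
        if len(row) != 3:
            continue  # malformed row
        s, p, o = (str(x).strip() if x is not None else "" for x in row)
        if not (s and p and o):
            continue  # a missing field makes the fact unusable
        key = (s.lower(), p.lower().replace(" ", "_"), o.lower())
        if key in seen:
            continue  # duplicate after normalization
        seen.add(key)
        cleaned.append(key)
    return cleaned

# Hypothetical raw export with typical problems.
raw_rows = [
    ("Blue Jacket ", "has color", "Blue"),
    ("blue jacket", "has_color", "blue"),   # duplicate once normalized
    ("red scarf", "", "red"),               # missing predicate
    ("red scarf", "has_color", None),       # missing value
    ("Red Scarf", "has_color", "Red"),
]
print(clean_triples(raw_rows))
```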

#3 Choosing a scalable infrastructure

Select tools and platforms that support both large-scale data processing and complex queries. Cloud solutions can be particularly helpful, as they offer scalability and flexibility.

#4 Testing and validating

Before launching, test the solution with real-world queries to ensure it meets your needs. Validate the responses for accuracy, relevance, and usability.

#5 Continuous monitoring and improving

Regularly update and refine your KG and LLM setup to reflect changes in data and business requirements.

Defining Common Industries and Use Cases

There are many real-world applications of KGs and LLMs working together. Here are some of the most common industries where they are seen.

#1 Healthcare

Pharmaceutical companies use a KG combined with an LLM to manage and answer complex queries about drug interactions, enabling quick access to precise information. For example, Pfizer uses a KG and LLM combination to provide accurate responses on drug interactions and clinical trials quickly. As a result, researchers and healthcare professionals can access up-to-date data, improving decision-making and patient safety.

#2 Financial services

Financial institutions use KGs and LLMs to automate customer service, delivering precise and compliant responses in real time. For example, JP Morgan uses KGs and LLMs to automate customer service, answering queries related to loans and investments while staying compliant with regulations.

#3 E-commerce

Retailers use this integration to personalize product recommendations based on customer profiles, recent purchases, and inventory data. We can provide many examples, but the most popular companies using this integration are Amazon and Walmart (both for personalization and management).

Ready to implement KGs and LLMs for your business?

Contact us

Are There Any Pitfalls And Considerations?

We’ve already mentioned some weaknesses of both tools. Integrating KGs and LLMs is complex and may present some pitfalls to overcome.

#1 Data privacy and security

Handling sensitive data requires strict adherence to security protocols to prevent data breaches and fraud.

#2 Technical requirements

Both KGs and LLMs require high computational resources, especially during training and querying, which may raise costs.

#3 Bias and fairness

Ensuring fair, unbiased outputs is crucial, particularly when KGs and LLMs influence decision-making in areas like recruitment, lending/leasing, or law enforcement.

Wrapping Up

If you are a business owner looking for ways to leverage AI-driven solutions, integrating Knowledge Graphs and Large Language Models should be on your list! These technologies allow businesses to provide more accurate, context-aware, and reliable responses, enhancing both customer interactions and internal processes. Are you ready to make a game-changing decision for your company?